Excerpt

Learn how to eliminate data redundancy and improve storage efficiency, performance, and accuracy. Discover strategies like normalization, data deduplication, centralization, validation, verification, and regular audits to prevent errors, inconsistencies, and confusion in your data systems.

Data redundancy refers to the storage of duplicate or overlapping data in multiple places. Left unmanaged, it wastes storage and allows copies of the same fact to drift out of sync. By applying techniques like normalization, deduplication, and centralization, organizations can effectively identify and eliminate redundant data.

Understanding Data Redundancy

Data redundancy occurs when the same data is replicated in different locations such as databases, spreadsheets and other repositories. Some common causes include:

- Multiple data sources containing overlapping information

- Duplication of records during migration or integration

- Repeating groups and attributes in poorly normalized databases

- Copying data across systems without cleansing

Excess redundancy increases storage and maintenance costs. It also raises the risk of inconsistencies, errors, and update anomalies, because changing one copy of a duplicated value leaves the other copies out of date. Eliminating redundancy is therefore crucial for optimizing storage, performance, and accuracy.

Identifying Data Redundancy

Typical symptoms of data redundancy include:

- Multiple records representing the same entity or event

- Repeating groups or columns in tables

- Identical data elements stored in different systems

- Large volumes of duplicated or stale data

Carefully analyzing schemata, queries, reports and statistical distributions helps uncover redundancy. Data profiling tools can automatically flag duplicates and redundancy issues for investigation.
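The Python sketch below gives a rough idea of what a profiling pass measures. It counts records that share the same values on a chosen set of key fields. The field names, the sample data, and the trim-and-lowercase comparison rule are all assumptions made for this example. Dedicated profiling tools apply far richer matching.

```python
from collections import Counter

def profile_duplicates(records, key_fields):
    """Summarize how many records share the same values on key_fields.

    records: list of dicts (hypothetical schema).
    Values are compared after trimming whitespace and lowercasing.
    """
    def key(rec):
        return tuple(str(rec.get(f, "")).strip().lower() for f in key_fields)

    counts = Counter(key(r) for r in records)
    duplicate_groups = {k: n for k, n in counts.items() if n > 1}
    # Every copy beyond the first in a group counts as redundant.
    redundant = sum(n - 1 for n in duplicate_groups.values())
    return {
        "total": len(records),
        "duplicate_groups": len(duplicate_groups),
        "redundant_records": redundant,
        "redundancy_ratio": redundant / len(records) if records else 0.0,
    }

customers = [
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "ada lovelace ", "email": "ADA@example.com"},
    {"name": "Alan Turing", "email": "alan@example.com"},
    {"name": "Grace Hopper", "email": "grace@example.com"},
]
report = profile_duplicates(customers, ("name", "email"))
print(report)
# {'total': 4, 'duplicate_groups': 1, 'redundant_records': 1, 'redundancy_ratio': 0.25}
```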

Effects of Data Redundancy

Data redundancy creates several data quality challenges:

Increased Storage - Extra capacity is needed to maintain redundant data

Inconsistencies - Updates may cause duplicates to diverge over time

Errors - Stale redundant copies can propagate inaccuracies

Confusion - It becomes unclear which copy is authoritative

Harder Maintenance - Any changes require updating multiple systems

These effects can diminish confidence in data and distort analytics.
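The inconsistency effect is easy to reproduce. This minimal Python sketch uses hypothetical data. A customer's email is copied onto every order row. An update then reaches only one of those rows, which leaves two conflicting versions of the same fact.

```python
# Denormalized: each order row carries its own copy of the customer's email.
orders = [
    {"order_id": 1, "customer": "ada", "email": "ada@old.example"},
    {"order_id": 2, "customer": "ada", "email": "ada@old.example"},
]

# An update that touches only one copy (e.g. a form that edits a single order)...
orders[0]["email"] = "ada@new.example"

# ...leaves the redundant copies disagreeing about the same customer.
emails_for_ada = {o["email"] for o in orders if o["customer"] == "ada"}
print(sorted(emails_for_ada))  # ['ada@new.example', 'ada@old.example']
```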

Strategies to Eliminate Data Redundancy

Here are five key ways to eliminate redundancy:

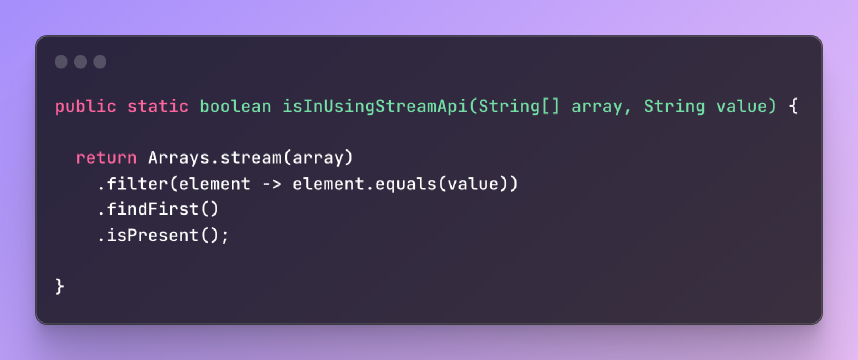

1. Normalization

Restructuring tables into normal forms (such as 1NF, 2NF, and 3NF) eliminates repeating groups and attributes that are stored redundantly across rows. This minimizes duplication by storing each fact once, in atomic, non-redundant records.
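Here is a minimal sketch of the normalization step. It uses Python's built-in `sqlite3` module and a hypothetical orders dataset. Customer details that repeat on every order row are moved into their own table, and each order references its customer by key.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Unnormalized source: customer details repeat on every order row (hypothetical data).
flat_orders = [
    (101, "C1", "Ada Lovelace", "ada@example.com", "Notebook"),
    (102, "C1", "Ada Lovelace", "ada@example.com", "Pen"),
    (103, "C2", "Alan Turing", "alan@example.com", "Notebook"),
]

# Normalized schema: customer attributes are stored once; orders reference them by key.
cur.executescript("""
CREATE TABLE customers (
    customer_id TEXT PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id TEXT NOT NULL REFERENCES customers(customer_id),
    product     TEXT NOT NULL
);
""")

for order_id, cust_id, name, email, product in flat_orders:
    # INSERT OR IGNORE keeps only the first copy of each customer row.
    cur.execute("INSERT OR IGNORE INTO customers VALUES (?, ?, ?)", (cust_id, name, email))
    cur.execute("INSERT INTO orders VALUES (?, ?, ?)", (order_id, cust_id, product))
conn.commit()

# A join reconstructs the original view without storing customer data twice.
rows = cur.execute("""
SELECT o.order_id, c.name, c.email, o.product
FROM orders o JOIN customers c USING (customer_id)
ORDER BY o.order_id
""").fetchall()
customer_count = cur.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(customer_count)  # 2
```

`INSERT OR IGNORE` assumes that the repeated customer details in the source agree with each other. If they conflict, later copies are silently dropped. A real migration should therefore check for such conflicts first.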

2. Data Deduplication

Identifying and consolidating duplicate records into authoritative master records improves data quality and consistency.
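Deduplication usually has two parts:

- a matching rule, which decides which records describe the same entity;
- a survivorship rule, which decides which values the master record keeps.

The sketch below uses deliberately simple versions of both, and these are assumptions for illustration. Records match on a trimmed, lowercased email. For each field, the first non-empty value wins.

```python
def match_key(rec):
    # Hypothetical matching rule: the same normalized email means the same customer.
    return rec["email"].strip().lower()

def deduplicate(records):
    """Merge records that share a match key into one master record each.

    Survivorship rule (an assumption for this sketch): the first non-empty
    value seen for each field wins, in input order.
    """
    masters = {}
    for rec in records:
        key = match_key(rec)
        master = masters.setdefault(key, {"email": key})
        for field, value in rec.items():
            if field == "email":
                continue
            if value and not master.get(field):
                master[field] = value
    return list(masters.values())

records = [
    {"email": "Ada@Example.com ", "name": "Ada Lovelace", "phone": ""},
    {"email": "ada@example.com", "name": "A. Lovelace", "phone": "555-0100"},
    {"email": "alan@example.com", "name": "Alan Turing", "phone": "555-0199"},
]
masters = deduplicate(records)
for m in masters:
    print(m["email"], m["name"], m["phone"])
# ada@example.com Ada Lovelace 555-0100
# alan@example.com Alan Turing 555-0199
```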

3. Data Centralization

Consolidating data into fewer, authoritative systems reduces scattering and redundancy. Master data management (MDM) helps centralize core domains such as customers and products.
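One common MDM pattern is a registry that maps each source system's local identifier to a single master identifier, often called a cross-reference or crosswalk. The class below is a hypothetical, minimal sketch of that idea. It is not the API of any MDM product. It matches records on normalized email and keeps the attributes of the first registration as the master record.

```python
import itertools

class MasterRegistry:
    """Hypothetical MDM-style registry sketch.

    Each (source_system, local_id) pair maps to one master ID, so the same
    entity held in several systems resolves to a single authoritative record.
    """

    def __init__(self):
        self._next_id = itertools.count(1)
        self.masters = {}    # master_id -> master ("golden") record
        self.crosswalk = {}  # (system, local_id) -> master_id
        self._by_key = {}    # normalized email -> master_id

    def register(self, system, local_id, record):
        key = record["email"].strip().lower()
        master_id = self._by_key.get(key)
        if master_id is None:
            # First sighting: create the master record from this source's attributes.
            master_id = f"M{next(self._next_id)}"
            self._by_key[key] = master_id
            attrs = {k: v for k, v in record.items() if k != "email"}
            self.masters[master_id] = {"email": key, **attrs}
        self.crosswalk[(system, local_id)] = master_id
        return master_id

registry = MasterRegistry()
registry.register("crm", "c-17", {"email": "ada@example.com", "name": "Ada Lovelace"})
registry.register("billing", "8841", {"email": "ADA@example.com", "name": "Ada L."})
registry.register("crm", "c-18", {"email": "alan@example.com", "name": "Alan Turing"})
print(len(registry.masters))                    # 2
print(registry.crosswalk[("billing", "8841")])  # M1
```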

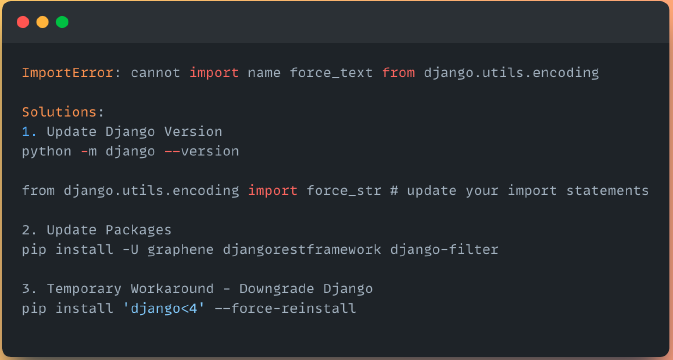

4. Data Validation and Verification

Input validation and integrity checks help prevent invalid or duplicate data from entering systems. Post-entry verification identifies redundancy that already exists.
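The sketch below pairs the two kinds of checks.

- An application-level `validate` function normalizes input and rejects malformed records. It is a hypothetical helper with a deliberately simple email pattern.
- A `UNIQUE` constraint in SQLite rejects any record whose normalized email already exists.

```python
import re
import sqlite3

# Deliberately simple pattern; real email validation is more involved.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate(record):
    """Normalize and validate an incoming record; raise ValueError if malformed."""
    email = record.get("email", "").strip().lower()
    name = record.get("name", "").strip()
    if not name:
        raise ValueError("name is required")
    if not EMAIL_RE.match(email):
        raise ValueError(f"invalid email: {record.get('email')!r}")
    return {"name": name, "email": email}

conn = sqlite3.connect(":memory:")
# The UNIQUE constraint is an integrity check enforced by the database itself.
conn.execute("CREATE TABLE customers (name TEXT NOT NULL, email TEXT NOT NULL UNIQUE)")

def insert_customer(conn, record):
    clean = validate(record)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO customers (name, email) VALUES (:name, :email)", clean)
        return "inserted"
    except sqlite3.IntegrityError:
        return "rejected duplicate"

print(insert_customer(conn, {"name": "Ada Lovelace", "email": "ada@example.com"}))   # inserted
print(insert_customer(conn, {"name": "Ada Lovelace", "email": " ADA@example.com"}))  # rejected duplicate
```

Normalizing before the insert matters. Without the trimming and lowercasing in `validate`, `" ADA@example.com"` would pass the `UNIQUE` check as a distinct value.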

5. Regular Data Audits

Periodic audits and data profiling catch new redundancy early, keeping it contained through continual oversight.
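A recurring audit can be as simple as a scheduled query. The query groups rows on a normalized business key and reports any key that appears more than once. This sketch assumes a hypothetical `customers` table in SQLite and treats email as the business key.

```python
import sqlite3

def audit_duplicates(conn):
    """Return (email_key, copies) for every normalized email stored more than once."""
    return conn.execute("""
        SELECT lower(trim(email)) AS email_key,
               COUNT(*)           AS copies
        FROM customers
        GROUP BY email_key
        HAVING COUNT(*) > 1
        ORDER BY copies DESC, email_key
    """).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.executemany("INSERT INTO customers (name, email) VALUES (?, ?)", [
    ("Ada Lovelace", "ada@example.com"),
    ("A. Lovelace", "ADA@example.com "),
    ("Alan Turing", "alan@example.com"),
    ("Ada", "ada@example.com"),
])

findings = audit_duplicates(conn)
for email_key, copies in findings:
    print(email_key, copies)  # ada@example.com 3
```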

Best Practices for Preventing Data Redundancy

- Institute data governance with policies on redundancy prevention

- Develop clear data models and schemata optimized for normalization

- Integrate quality checks into ETL processes to catch duplicates before they are loaded

- Consolidate multiple systems into centralized repositories

- Schedule regular data audits and deduplication initiatives

- Use persistent unique identifiers for records
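Two of these practices, ETL quality checks and persistent identifiers, can be combined in an idempotent load step. This sketch uses a hypothetical schema in SQLite via Python. Each customer receives a UUID the first time its business key is seen. Re-running the same batch, for example after a job is retried, neither duplicates rows nor changes existing identifiers.

```python
import sqlite3
import uuid

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE customers (
        customer_uid TEXT PRIMARY KEY,      -- persistent identifier, assigned once
        email        TEXT NOT NULL UNIQUE,  -- business key used to detect repeats
        name         TEXT NOT NULL
    )
""")

def load_batch(conn, batch):
    """Idempotent ETL load sketch: re-running the same batch adds no duplicates."""
    with conn:
        for row in batch:
            email = row["email"].strip().lower()
            # A fresh UUID is generated, but it is discarded if the email already exists.
            conn.execute(
                "INSERT OR IGNORE INTO customers (customer_uid, email, name) VALUES (?, ?, ?)",
                (str(uuid.uuid4()), email, row["name"].strip()),
            )

batch = [
    {"email": "ada@example.com", "name": "Ada Lovelace"},
    {"email": "Alan@Example.com", "name": "Alan Turing"},
]
load_batch(conn, batch)
first_ids = dict(conn.execute("SELECT email, customer_uid FROM customers"))
load_batch(conn, batch)  # e.g. a retried job
second_ids = dict(conn.execute("SELECT email, customer_uid FROM customers"))
print(first_ids == second_ids)  # True
print(len(second_ids))          # 2
```

`INSERT OR IGNORE` keeps the values from the first load. If later loads should update attributes instead, the usual alternative is SQLite's upsert syntax (`INSERT ... ON CONFLICT(email) DO UPDATE`, available since SQLite 3.24).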

Conclusion

Data redundancy has detrimental effects on quality, storage and maintenance. A multi-pronged strategy combining normalization, deduplication, centralization and governance is key to containing redundancy. With careful analysis and planning, organizations can optimize data architectures to eliminate inefficient duplication and improve overall data veracity and reliability.