Excerpt§

Pioneers like Richard Hamming, Irving Reed, Gustave Solomon and Claude Berrou invented the error-correcting code techniques widely used in digital systems today.

Introduction§

Error-correcting codes play a vital role in modern digital communications and storage systems. They enable detecting and correcting accidental changes to data caused during transmission or storage. Without error correction, static, signal noise and other environmental factors would render digital data useless.

The foundations of error-correcting codes were laid in the 1940s and 50s as digital computing emerged. Over decades, various scientists and engineers contributed incremental innovations that led to sophisticated error correction implementations. This article explores the history and pioneering inventors of error-correcting codes.

Error Detection§

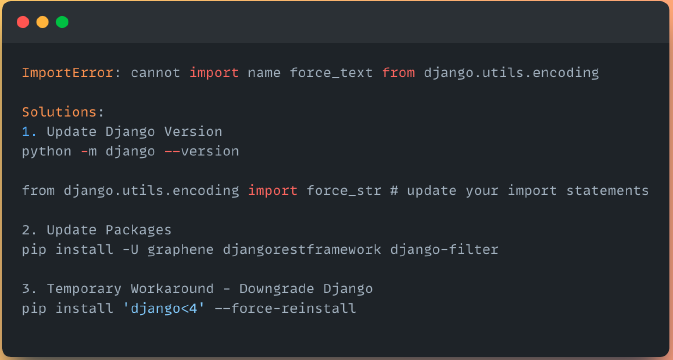

The first step towards error correction is detecting that errors have occurred in the first place. Simple error detection techniques were developed early on:

Parity Bits - The parity bit method involves adding an extra 0 or 1 to a stream of binary data to ensure the total 1s in a block of data is even or odd. The receiving end checks the parity and detects errors if the count is wrong. Parity provides basic error detection but cannot pinpoint or correct the error.

Checksums - Checksums work by calculating a short fixed-length hash value from a block of data. This checksum is appended to the message. The receiving side independently calculates the checksum to detect any differences. Cyclic redundancy checks (CRCs) are commonly used checksum algorithms. However, checksums can only detect errors.

These methods set the stage for true error correction.

Error Correction§

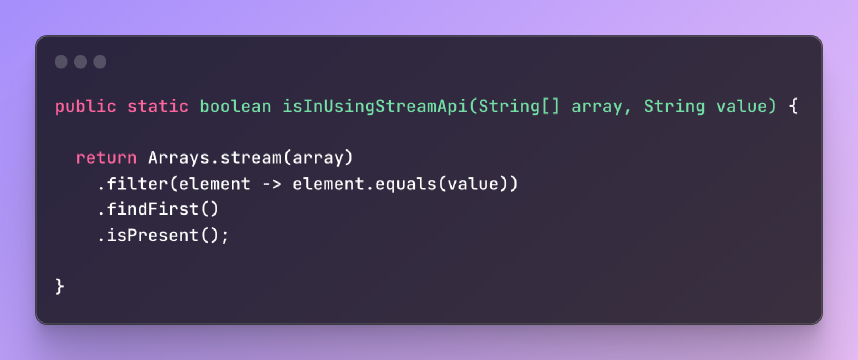

Error correcting codes go beyond just detecting errors - they enable reconstruction of the original data in many cases. A pioneering technique was Hamming codes, invented by engineer Richard Hamming in the 1950s.

Hamming codes work by calculating parity bits across different overlapping bit patterns in data. By cleverly designing the parity calculations, the location of an error could be deduced based on which parity checks fail. This enables flipping the erroneous bit to correct the error. Hamming codes are employed in RAM error correction.

Hamming’s invention demonstrated that error correction is possible by intelligently encoding data with redundant information prior to transmission. This laid the foundation for more advanced schemes.

Reed-Solomon Codes§

In 1960, engineers Irving Reed and Gustave Solomon published an influential paper introducing Reed-Solomon codes. Based on abstract algebra, these codes work by mapping data to points in a multidimensional polynomial. Redundant data points are added so that if a few points are corrupted, the original polynomial curve can still be reconstructed.

Reed-Solomon codes achieve much higher error correction capacity compared to Hamming codes. They can recover data even with high error rates. Reed-Solomon coding plays a key role in storage media, satellite communications, barcodes and other applications. Together with hamming codes, they drove adoption of error correction.

Turbo Codes§

By the 1990s, information theory established theoretical limits on the error correction capacity for a given level of redundancy. Conventional techniques struggled to reach these limits. Claude Berrou and colleagues broke this barrier when they introduced Turbo codes in 1993.

Turbo codes approach the theoretical limit by using an iterative decoding process. Multiple decoders run in sequence to repeatedly refine and improve error recovery. Turbo coding enabled efficient error-free mobile communications. These concepts influenced modern cellular and Wi-Fi standards.

Conclusion§

Pioneering researchers like Hamming, Reed, Solomon and Berrou played an instrumental role in developing the error-correcting codes used pervasively today. Their inventions enabled reliable digital data transmission and storage even in noisy environments.

As technology advances, newer types of errors arise requiring more sophisticated coding schemes. For instance, quantum computing poses threats to cryptographic security. Post-quantum cryptography is an active area being researched.

Error-correcting codes have come a long way since the 1940s but still have much scope for future innovation. As digital systems become ubiquitous, these algorithms only grow more important for robust and reliable data communication and storage everywhere.